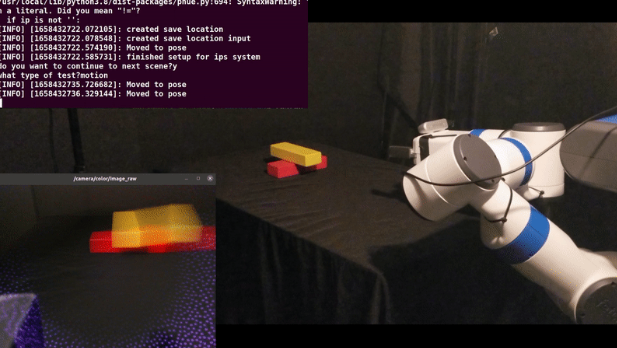

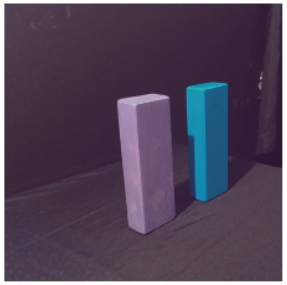

IPS Predictions: Red – Failure, Yellow – Failure

PI Predictions: Red – Not Removable, Yellow – Not Removable

Introduction

These datasets address the use case of a perception task, physics intuition (PI), that determines whether a block can be removed from a scene non-destructively. We designed a combined introspective perception system (IPS) that detects three common failure types: blur, light direction, and angle-of-view. We split these failure cases into two research areas: Blur Detection & Classification and Light Direction & Angle-of-View Failure Prediction. We provide our datasets and training weights for the blur subsystem, and our datasets for the light direction and angle-of-view subsystem. These are intended for detecting and explaining failures not only in non-destructive block removal from a scene (PI) but also in other general perception tasks. More information on this research can be found in the thesis document linked here.

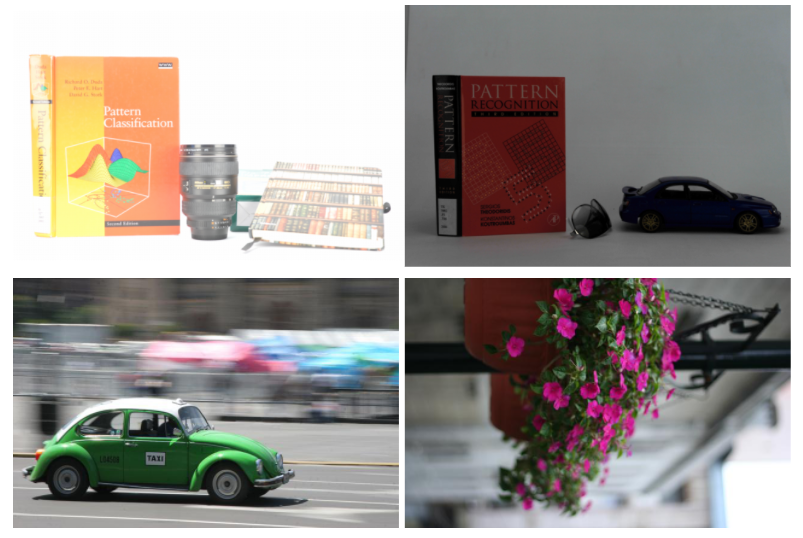

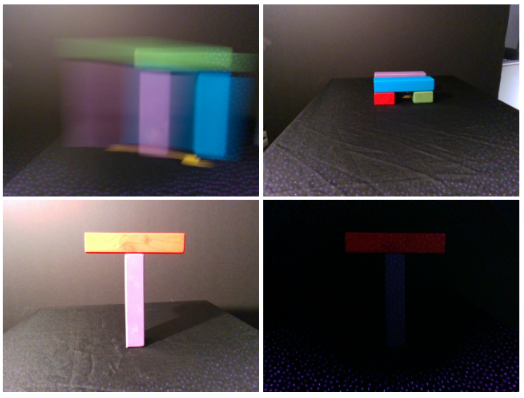

Blur Detection & Classification

The major contribution of these datasets is the addition of segmented blur with exposure. We use five blur classes: No Blur, Motion, Focus, Underexposure, and Overexposure. We provide four general training datasets for testing real and synthetic over- and underexposure additions. We also provide the trained weights for each of these training sets. Two test sets are provided for testing general blur as well as blur in the context of a pick-and-place task. For our IPS, we collected a blur dataset and provide the real training and testing datasets with training weights. These were used in our combined IPS Blur Detection and Classification subsystem.
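As a minimal sketch of how a segmented ground-truth mask with these five classes might be summarized, the snippet below computes the fraction of pixels assigned to each class. The integer label order and the `class_fractions` helper are assumptions made for illustration; the released ground truth may encode classes differently (for example, as colors in the overlaid images).

```python
from collections import Counter

# Hypothetical label order (an assumption, not taken from the released data).
BLUR_CLASSES = ("no_blur", "motion", "focus", "underexposure", "overexposure")


def class_fractions(mask):
    """Return the fraction of pixels assigned to each blur class.

    `mask` is a 2D list of integer class indices, one per pixel.
    """
    flat = [label for row in mask for label in row]
    if not flat:
        raise ValueError("mask is empty")
    counts = Counter(flat)
    unknown = set(counts) - set(range(len(BLUR_CLASSES)))
    if unknown:
        raise ValueError(f"unknown class indices: {sorted(unknown)}")
    # Counter returns 0 for classes that do not occur in the mask.
    return {name: counts[i] / len(flat) for i, name in enumerate(BLUR_CLASSES)}


# Toy 4x4 mask: left half sharp, right half overexposed.
mask = [[0, 0, 4, 4] for _ in range(4)]
fractions = class_fractions(mask)
```

A summary like this can help check class balance before training, since exposure classes may cover only small regions of a scene.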

Code

Please download both the updated Kim et al. original model that runs on TensorFlow 2 here and the exposure addition to the model here. Follow the README files and additional documents to run the models.

Datasets

Real & Synthetic Exposure

https://bridge.apt.ri.cmu.edu/exposure/real_synthetic_dataset

The link above contains three combinations of CUHK and PHOS combined training datasets, with ground-truth overlaid images covering five blur types: No Blur, Motion, Focus, Underexposure, and Overexposure. It also contains one recorded exposure training dataset with real over- and underexposed objects in robot pick-and-place scenes. Two testing datasets are included as well: one with real CUHK and PHOS combined blur images without overlaying, and another with our recorded exposure and PHOS blur images for testing exposure only. More information on these datasets can be found in the thesis document linked above.

IPS Recorded Blur

https://bridge.apt.ri.cmu.edu/exposure/ips_real_dataset

The link above contains a recorded blur training dataset of real blur ground-truth overlaid images for five types: No Blur, Motion, Focus, Underexposure, and Overexposure. One testing dataset is also included. More information on this dataset can be found in the thesis document linked above.

Network Weights

Real & Synthetic Exposure

https://bridge.apt.ri.cmu.edu/exposure/real_synthetic_weights

The link above contains three sets of weights trained on the three datasets described above in the dataset subsection Real & Synthetic Exposure. More information on these weights can be found in the thesis document linked above.

IPS Recorded Blur

https://bridge.apt.ri.cmu.edu/exposure/ips_real_weights

The link above contains the weights trained on the recorded blur training dataset described above in the dataset subsection IPS Recorded Blur. More information on these weights can be found in the thesis document linked above.

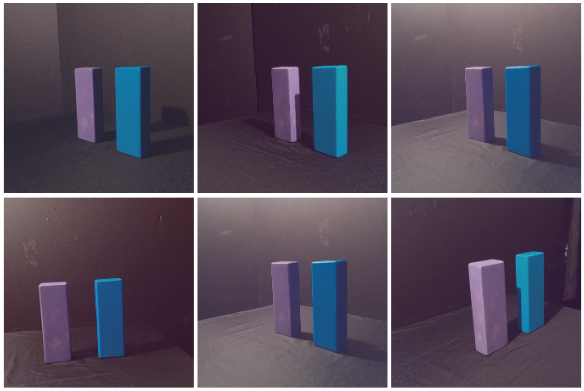

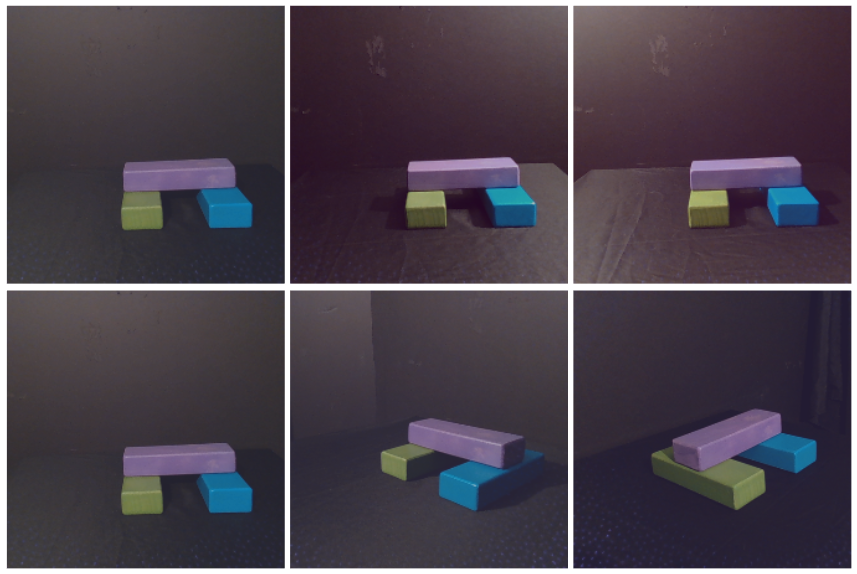

Light Direction & Angle-of-View Failure Prediction

We provide three variations of training and testing datasets that were run on a modified version of the physics intuition (PI) code referenced in Ahuja et al. Each variation trains and tests on a different selection of light directions and angles of view, so that the conditions present in training can differ from those in testing.

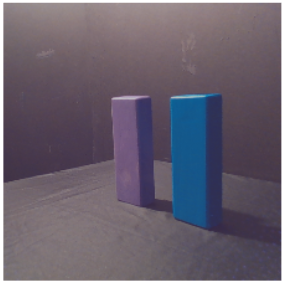

Scenario-Based Split

Training

Testing

https://bridge.apt.ri.cmu.edu/exposure/scenario_based_split_dataset

Training contains full unique scenes with all light directions and all angles of view. Testing contains unique scenes that were not present in training, also with all light directions and all angles of view.

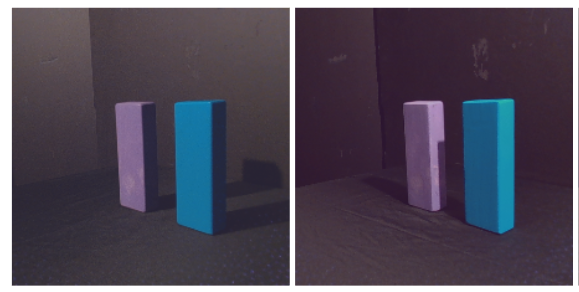

Light Direction-Based Split

Training

Testing

https://bridge.apt.ri.cmu.edu/exposure/ld_based_split_dataset

Training contains full unique scenes with only two light directions and all angles of view. Testing contains unique scenes with only one light direction.

Angle-of-View-Based Split

Training

Testing

https://bridge.apt.ri.cmu.edu/exposure/aov_based_split_dataset

Training contains full unique scenes with only two angles of view and all light directions. Testing contains unique scenes with only one angle of view.
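The three split strategies above can be sketched as filters over a list of samples. This is an illustrative sketch, not the code used to build the released datasets. The `Sample` fields, the condition names, and both helper functions are hypothetical. It also assumes three values per condition, that test scenes are disjoint from training scenes, and that the single condition used in testing is the one excluded from training.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Sample:
    scene: str
    light_direction: str
    angle_of_view: str


def scenario_split(samples, test_scenes):
    """Scenario-based split: hold out whole scenes, keep every condition."""
    train = [s for s in samples if s.scene not in test_scenes]
    test = [s for s in samples if s.scene in test_scenes]
    return train, test


def condition_split(samples, test_scenes, attribute, test_value):
    """Condition-based split on `attribute` (light direction or angle of view).

    Training keeps non-test scenes whose attribute differs from `test_value`;
    testing keeps held-out scenes whose attribute equals `test_value`.
    """
    train = [
        s for s in samples
        if s.scene not in test_scenes and getattr(s, attribute) != test_value
    ]
    test = [
        s for s in samples
        if s.scene in test_scenes and getattr(s, attribute) == test_value
    ]
    return train, test


# Toy data: 3 scenes x 3 light directions x 3 angles of view (names are made up).
scenes = ["scene_a", "scene_b", "scene_c"]
lights = ["left", "right", "top"]
angles = ["low", "mid", "high"]
samples = [Sample(*combo) for combo in product(scenes, lights, angles)]

sc_train, sc_test = scenario_split(samples, {"scene_c"})
ld_train, ld_test = condition_split(samples, {"scene_c"}, "light_direction", "top")
aov_train, aov_test = condition_split(samples, {"scene_c"}, "angle_of_view", "high")
```

Using one generic `condition_split` for both the light direction and angle-of-view splits keeps the two protocols symmetric; only the attribute name changes.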

Combined Introspection

Demo Videos (Jenga Blocks)

Motion Scenario Example:

IPS Predictions: Red – Failure, Yellow – Failure. Physics Intuition Predictions: Red – Not Removable, Yellow – Not Removable. Prediction Results: Red – Incorrect, Yellow – Correct.

Overexposure Scenario Example:

IPS Predictions: Red – Failure, Yellow – No Prediction. Physics Intuition Predictions: Red – Not Removable, Yellow – No Prediction. Prediction Results: Red – Incorrect, Yellow – No Prediction.

Underexposure Scenario Example:

IPS Predictions: Red – No Prediction, Yellow – No Prediction. Physics Intuition Predictions: Red – No Prediction, Yellow – No Prediction. Prediction Results: Red – No Prediction, Yellow – No Prediction (considered as 1 failure case).

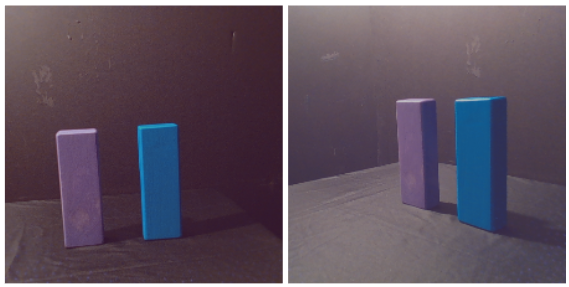

Light Direction Scenario Example:

IPS Predictions: Red – Failure, Yellow – Success. Physics Intuition Predictions: Red – Removable, Yellow – Removable. Prediction Results: Red – Correct, Yellow – Correct.

Angle-of-View Scenario Example:

IPS Predictions: Red – Failure, Yellow – Success. Physics Intuition Predictions: Red – Not Removable, Yellow – Removable. Prediction Results: Red – Incorrect, Yellow – Correct.
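One way to read the demo captions together is to pair each IPS prediction with the corresponding PI prediction result and count the combinations. The sketch below does this for the five scenarios listed above. It rests on one assumption: that an IPS "Failure" is meant as a prediction that the PI output will be incorrect. The tuple layout and the `tally` helper are hypothetical, and "No Prediction" entries are simply counted separately rather than scored, which may differ from how the thesis treats them.

```python
from collections import Counter

# (scenario, block, IPS prediction, PI prediction result), transcribed from the
# captions above. None marks a "No Prediction" entry.
DEMO = [
    ("motion", "red", "failure", "incorrect"),
    ("motion", "yellow", "failure", "correct"),
    ("overexposure", "red", "failure", "incorrect"),
    ("overexposure", "yellow", None, None),
    ("underexposure", "red", None, None),
    ("underexposure", "yellow", None, None),
    ("light_direction", "red", "failure", "correct"),
    ("light_direction", "yellow", "success", "correct"),
    ("angle_of_view", "red", "failure", "incorrect"),
    ("angle_of_view", "yellow", "success", "correct"),
]


def tally(rows):
    """Count (IPS prediction, PI result) pairs; track missing predictions apart."""
    counts = Counter()
    for _scenario, _block, ips, result in rows:
        if ips is None or result is None:
            counts["no_prediction"] += 1
        else:
            counts[(ips, result)] += 1
    return counts


counts = tally(DEMO)
for key, value in sorted(counts.items(), key=str):
    print(key, value)
```

Under this reading, a ("failure", "incorrect") pair is a case where the IPS flagged a PI error that did occur, and a ("failure", "correct") pair is a case where it flagged one that did not.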